Facebook said it would take down posts mocking George Floyd’s death, wouldn’t allow false claims that arsonists affiliated with antifa were behind wildfires in the Western U.S. and wouldn’t allow praise for the man who shot people at a BLM protest in Kenosha, Wis.

Photo: From left: AP; Reuters; Zuma Press

Facebook Inc. this year has made a flurry of new rules designed to improve the discourse on its platforms. When users report content that breaks those rules, a test by The Wall Street Journal found, the company often fails to enforce them.

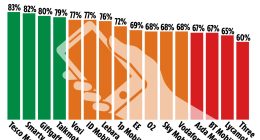

Facebook allows all users to flag content for review if they think it doesn’t belong on the platform. When the Journal reported more than 150 pieces of content that Facebook later confirmed violated its rules, the company’s review system allowed the material—some depicting or praising grisly violence—to stand more than three-quarters of the time.

Facebook’s errors in blocking content in the Journal’s test don’t reflect the overall accuracy of its content-moderation system, said Sarah Pollack, a company spokeswoman. To moderate the more than 100 billion pieces of content posted each day to the Facebook platform, the company both reviews user reports and actively screens content using automated tools, Ms. Pollack said.

“Our priority is removing content based on severity and the potential for it going viral,” she said.

Amid a contentious election cycle and civil unrest, the social network has made new rules prohibiting false claims that arsonists affiliated with the far-left antifascist protest movement known as antifa were behind catastrophic wildfires in the Western U.S. and banning celebration of the ambush shooting of two Los Angeles deputies. Before that, the company said it wouldn’t allow praise for the shooting of three people at a Black Lives Matter protest in Kenosha, Wis., and pledged to take down items mocking George Floyd, whose death set off nationwide protests against police brutality.

Last week, the company announced several measures related to the Nov. 3 presidential election, including a ban on any posts that contain militarized language related to poll-watching operations. On Monday, Chief Executive Mark Zuckerberg said Facebook is banning Holocaust denial on the platform, enhancing the more limited constraints on such content previously in place, because of rising anti-Semitism.

A helicopter carried water last month to the Brattain fire in Fremont National Forest in Paisley, Ore.

Photo: Adrees Latif/Reuters

Facebook’s content moderation gained renewed attention Wednesday when the company limited online sharing of New York Post articles about the son of Democratic presidential nominee Joe Biden, saying it needed guidance from third-party fact-checkers who routinely vet content on the platform.

On a platform with 1.8 billion daily users, however, making a rule banning content doesn’t mean that content always disappears from Facebook.

“Facebook announces a lot of policy statements that sound great on paper, but there are serious concerns with their ability or willingness to enforce the rules as written,” said Evelyn Douek, a Harvard University lecturer and researcher at the Berkman Klein Center for Internet & Society who studies social-media companies’ efforts to regulate their users’ behavior.

Over several days in late September, the Journal flagged 276 posts, memes and comments that appeared to break Facebook rules against the promotion of violence and dangerous misinformation.

Facebook took down 32 posts the Journal reported, leaving up false posts about a left-wing arson conspiracy, jokes about the victims of the Kenosha violence superimposed over grisly images of their wounds, and comments wishing death or lifelong disabilities for Los Angeles sheriff’s deputies who survived an unprovoked shooting.

“We know this is not what you wanted, and we thought it might help if we explain how the review process works,” read Facebook’s standardized response to the posts submitted by the Journal. “Our technology reviewed your report and, ultimately, we decided not to take the content down.”

When the Journal contacted Facebook to review the more than 240 posts that the company’s moderation system had deemed allowable, the company ultimately concluded that more than half “should have been removed for violating our policies,” Ms. Pollack said. The company later removed many of those posts.

Because Facebook’s artificial-intelligence systems are increasingly geared toward identifying content that has the potential to go viral, many of the items the Journal reported—such as comments on news articles or posts in private groups that weren’t currently receiving a large volume of views—would have been given low priority for review, Ms. Pollack said.

The decisions highlight both the difficulty Facebook faces in enforcing its policies and the fine distinctions the company makes in defining what content is allowed and what breaks its rules. For example, Facebook’s policy against denigrating the victims of tragedies has an exception for statements regarding the victims’ criminal histories, even if those statements are false.

Mr. Zuckerberg has warned of risks that the U.S. election could lead to street violence, and announced a series of measures to stop the spread of misinformation and content that incites any kind of violence. The company said it has removed more than 120,000 pieces of content for violating voter-interference policies and displayed warnings on more than 150 million items that had been deemed false by Facebook’s fact-checking partners.

Facebook also faces pressure from users who complain that its content policies are too strict and limit public discourse. In leaked recordings of internal meetings published by the Verge, Mr. Zuckerberg told employees that the most common criticism from users is that the company removes too many posts, which many of those users perceive as a sign of bias against conservative views.

Facebook’s content-moderation system relies on both user reports and an automated detection system that seeks to instantly delete violative content or flag potential violations for human review. Facebook algorithms then give priority to what content gets reviewed by its thousands of human moderators, generally contract employees.

Ms. Pollack, the Facebook spokeswoman, said that coronavirus-related business disruptions and improvements to its AI systems have led the company to shift resources toward automated reviews of posts and away from individual users’ reports.

A George Floyd mural in Minneapolis.

Photo: Bryan Smith/Zuma Press

In an August blog post, Facebook said its AI could detect content violations “often with greater accuracy than reports from users” and “better give priority to the most impactful work for our review teams.”

The Journal’s test found Facebook didn’t always handle user reports as quickly as promised. Around half the items the Journal flagged were reviewed within 24 hours, the time frame in which Facebook’s system initially said in its automated response messages that it would provide an answer. Some—including posts reported as inciting violence or promoting terrorism—remained outstanding far longer, with a few still awaiting a decision more than two weeks later.

In a conference call with reporters last week, Guy Rosen, Facebook’s vice president of integrity, declined to say how long, on average, Facebook takes to review user-reported content.

The reporting system offers users a chance to appeal the company’s decision. When the Journal filed such appeals, Facebook a day later sent automated emails stating that Covid-19 resource limitations meant “we can’t review your report a second time.”

The message suggested hiding or blocking “upsetting” content in lieu of making an appeal, and said that the report would be used to train the platform’s algorithms.

Write to Jeff Horwitz at [email protected]

Copyright ©2020 Dow Jones & Company, Inc. All Rights Reserved.