User reports of violent content on Facebook jumped more than 10-fold in the hours before the assault on the Capitol, internal documents show.

Photo: Jose Luis Magana/Associated Press

As footage of a pro-Trump mob ransacking the U.S. Capitol streamed from Washington, D.C., last Wednesday, Facebook Inc.’s data scientists and executives saw warning signs of further trouble.

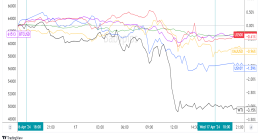

User reports of violent content jumped more than 10-fold from the morning, according to documents viewed by The Wall Street Journal. A tracker for user reports of false news surged to nearly 40,000 reports an hour, about four times recent daily peaks. On Instagram, the company’s popular photo-sharing platform, views skyrocketed for content from authors in “zero trust” countries, reflecting potential efforts at platform manipulation by entities overseas.

Facebook’s platforms were aflame, the documents show. One Instagram presentation, circulated internally and seen by the Journal, was subtitled “Why business as usual isn’t working.”

Company leaders feared a feedback loop, according to people familiar with the matter, in which the incendiary events in Washington riled up already on-edge social-media users—potentially leading to more strife in real life.

Facebook ultimately decided on a series of actions over the past week that, taken together, amount to its most aggressive interventions against President Trump and his supporters. And they show the company continuing to grapple with how best to police its platforms while still allowing for political discussion.

The issues have consumed Facebook for much of the Trump administration and are likely to persist for the near future, as it navigates competing criticism from some that it does too little to curb problematic content and from others that its moderation efforts veer into censorship.

In the chaotic hours as the Capitol siege unfolded, Facebook executives were wary of stifling political discussion, consistent with Chief Executive Mark Zuckerberg’s longstanding admonition that the company shouldn’t be an arbiter of free speech—and his aversion to a showdown with the president.

“Hang in there everyone,” wrote Chief Technology Officer Mike Schroepfer in a post reviewed by the Journal, asking for patience while the company figured out how best “to allow for peaceful discussion and organizing but not calls for violence.”

“All due respect, but haven’t we had enough time to figure out how to manage discourse without enabling violence?” responded one employee, one of many unhappy responses that together gathered hundreds of likes from colleagues. “We’ve been fueling this fire for a long time and we shouldn’t be surprised that it’s now out of control.”

Chief Technology Officer Mike Schroepfer asked employees for patience last week as Facebook determined how best ‘to allow for peaceful discussion and organizing but not calls for violence.’

Photo: Mike Blake/Reuters

By midafternoon Wednesday, Mr. Zuckerberg and other senior executives had taken their first steps: Two of Mr. Trump’s posts came down, and Facebook privately designated the U.S. a “temporary high-risk location” for political violence, according to documents viewed by the Journal. The designation triggered emergency measures to limit potentially dangerous discourse on its platforms.

Next, Facebook announced it was banning the president for 24 hours. Internally, employees kept pushing, according to posts seen by the Journal.

“You may feel like this is not enough, but it’s worth stepping back and remembering that this is truly unprecedented,” Mr. Schroepfer responded early Thursday morning. “Not sure I know the exact right set of answers but we have been changing and adapting every day, including yesterday.”

Before the open of business Thursday, Mr. Zuckerberg said Facebook would extend its ban of Mr. Trump through at least the inauguration. Later that morning it deleted one of the most active pro-Trump political groups on Facebook, the #WalkAway Campaign, which was cited repeatedly for breaking Facebook’s rules last year but never taken down, according to a person familiar with the situation.

By Monday, Facebook said it would prohibit all content containing the phrase “stop the steal”—a slogan popular among Trump supporters who back his efforts to overturn the election—and that it would keep the emergency measures that it had activated the day of the Capitol assault in place through Inauguration Day.

Mr. Zuckerberg, who has grown more involved in politics over the last four years, has been personally involved in the decisions, people familiar with the matter say.

One person familiar with the discussions said the changes were already being planned but that Wednesday’s events “sped it up by 10x.”

Facebook declined to comment on its internal deliberations over the handling of content related to the riot, which left five people dead.

At a Reuters conference on Monday, Facebook Chief Operating Officer Sheryl Sandberg said the company has no plans to lift its Trump ban. While the Capitol mob had mobilized on social media, Ms. Sandberg said the attack was largely organized on other digital platforms.

“Our enforcement is never perfect, so I’m sure there were still things on Facebook,” said Ms. Sandberg. “I think these events were largely organized on platforms that don’t have our abilities to stop hate, don’t have our standards and don’t have our transparency.”

While niche platforms have surged in popularity among many of the groups associated with the riot, distortions about the election that fed the violence have also been prevalent on mainstream platforms, say analysts including Jared Holt, a visiting research fellow at the Atlantic Council’s Digital Forensic Research Lab who has tracked extremists’ activities related to the election and transition of power.

Facebook has long tried to draw a line, with mixed success, between merely provocative content and posts that are likely to lead to real-world harm. The social-media-fueled genocide in Myanmar, which Facebook in 2018 said it could have done more to prevent, is perhaps the starkest example of the latter. The company’s decision Wednesday was largely about Mr. Zuckerberg concluding that Mr. Trump crossed that line.

“We believe that the public has a right to the broadest possible access to political speech,” Mr. Zuckerberg posted on Facebook, explaining the decision. “But the current context is now fundamentally different, involving use of our platform to incite violent insurrection against a democratically elected government.”

He said that “the risks of allowing the President to continue to use our service during this period are simply too great.”

Current and former employees said the company’s senior executives appear to be responding to the shifting political winds. “The leadership thinks any price they pay on being tougher on Trump is much less than a few weeks ago, a few months ago,” said one person familiar with the company.

Facebook denied that the coming change in government—with Democratic President-elect Joe Biden set to be sworn in on Jan. 20—affected its handling of the crisis. “That’s not how this happened,” said Facebook spokesman Andy Stone. “The threat of continued violence and civil unrest was at the core of this decision.”

Facebook executives are aware that the company’s decisions will likely affect employee morale, which they track closely due to concerns that unhappiness could harm efforts to find and retain talented employees. Mr. Zuckerberg’s refusal to take down Mr. Trump’s post warning, “When the looting starts, the shooting starts” during last year’s Black Lives Matter demonstrations triggered a virtual walkout and coincided with a nearly 30-point reduction in employee confidence in Facebook’s leadership, according to the company’s internal “pulse” polls. Employee pride in working at the company declined by a similar amount.

Though the company subsequently applied labels to some of Mr. Trump’s posts, neither measure has fully recovered.

Originally designed for countries where Facebook has been used to carry out genocide or incite political bloodshed, many of the techniques deployed this week were first imposed in the U.S. shortly after the Nov. 3 election—then gradually lifted as questions of the vote’s outcome were put to rest.

The changes included a number of algorithmic tweaks, including accelerating the shutdown of comments on threads that were “starting to have too much hate speech or violence & incitement” and recommending fewer pages from what it deemed the “low quality news ecosystem,” according to the internal documents.

Facebook executives had said before the election that they hoped to avoid using the emergency tools at all. They later described doing so as a one-off decision.

On Thursday, a presentation about the new measures produced following the assault on the Capitol defined many as “US2020 Levers, previously rolled back.”

Write to Jeff Horwitz at [email protected] and Deepa Seetharaman at [email protected]

Copyright ©2020 Dow Jones & Company, Inc. All Rights Reserved.